🌱 Another ChatGPT Moment

This time for simulation, rendering and robotics

In this issue:

Simulation’s ChatGPT moment

The new era of test-time compute

A company with no humans

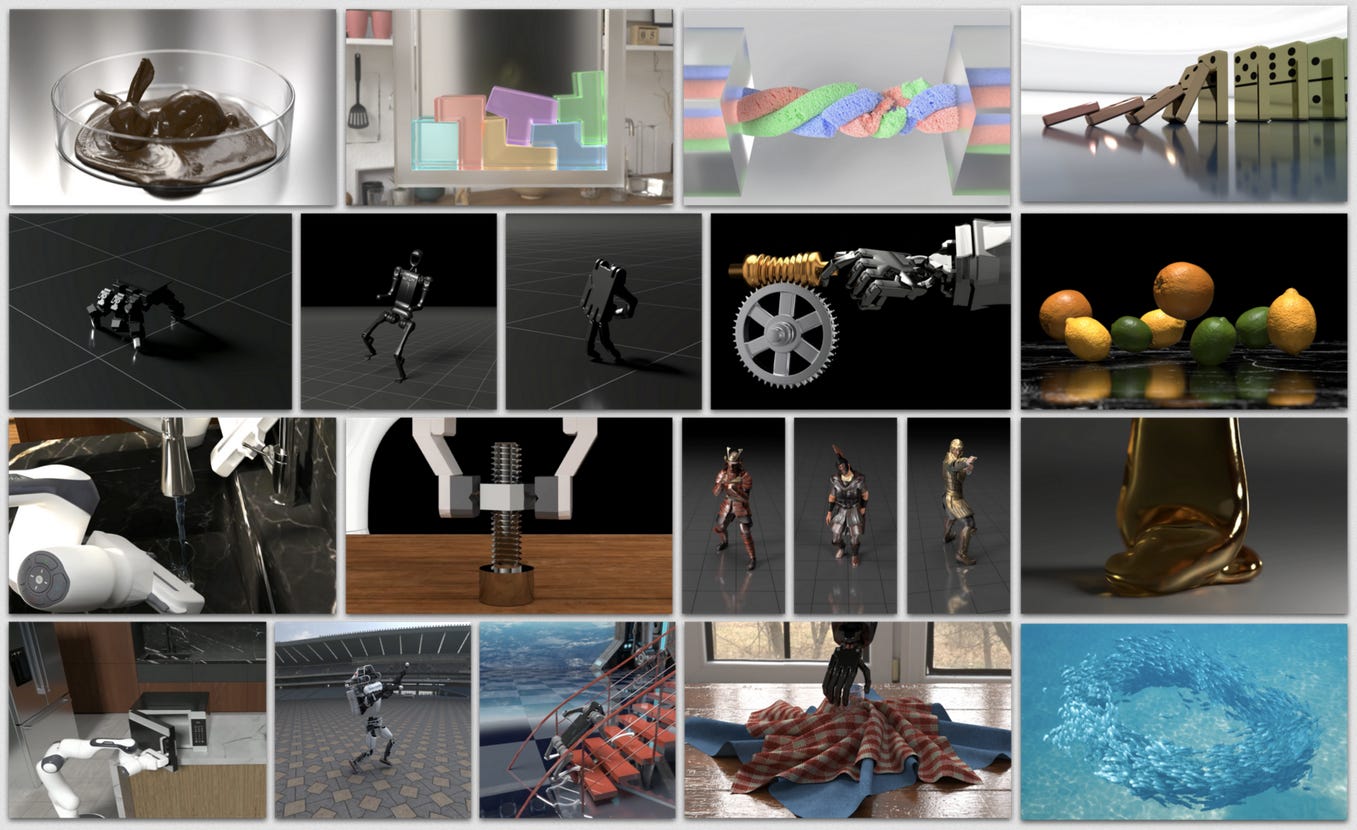

1. Genesis: A Generative and Universal Physics Engine for Robotics and Beyond

Watching: Genesis (project)

What problem does it solve? Robotics and Embodied AI have seen a lot of progress in recent years, but the lack of comprehensive simulation platforms has been a major bottleneck. Existing solutions often focus on specific aspects like physics simulation, rendering, or data generation, but fail to provide a unified framework that seamlessly integrates all these components. This fragmentation makes it challenging for researchers and developers to efficiently build and test their models in realistic environments.

How does it solve the problem? Genesis tackles this issue by offering a holistic platform that combines a powerful physics engine, a user-friendly robotics simulation environment, a photo-realistic rendering system, and a generative data engine. The physics engine is designed to simulate a wide range of materials and phenomena, ensuring accurate and consistent behavior across different scenarios. The robotics simulation platform provides a lightweight and fast interface for building and testing models, while the rendering system enables the creation of visually realistic environments. Perhaps most notably, the generative data engine can transform natural language descriptions into various data modalities, including videos, camera parameters, character motion, and even deployable real-world policies.

What's next? The gradual rollout of the generative framework will be a significant milestone, enabling users to effortlessly create rich, diverse, and physically accurate datasets for training and testing their models. Additionally, the open-source nature of the physics engine and simulation platform will foster a vibrant community of developers and researchers, leading to rapid advancements and innovations in the field of robotics and embodied AI. As the platform matures, it has the potential to become the go-to solution for anyone working on physical AI applications, from academia to industry.

2. Scaling test-time compute with open models

Watching: Test-time compute (blog/repo)

What problem does it solve? The scaling of train-time compute has been the dominant paradigm for improving the performance of large language models (LLMs). However, this approach is becoming increasingly expensive, with billion-dollar clusters already on the horizon. Test-time compute scaling offers a complementary approach, allowing models to "think longer" on harder problems without relying on ever-larger pretraining budgets.

How does it solve the problem? Recent research from DeepMind shows that test-time compute can be scaled optimally through strategies like iterative self-refinement or using a reward model to search over the space of solutions. By adaptively allocating test-time compute per prompt, smaller models can rival, and sometimes even outperform, their larger, more resource-intensive counterparts. The blog's authors have implemented DeepMind's recipe to boost the mathematical capabilities of open models at test time and developed an unpublished extension called Diverse Verifier Tree Search (DVTS) to improve diversity and deliver better performance, particularly at large test-time compute budgets.
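To make the reward-model search idea concrete, here is a minimal sketch of best-of-N sampling, the simplest strategy in this family: draw several candidate answers, score each one with a verifier, and keep the top-scoring candidate. Everything here is illustrative. `best_of_n`, `make_toy_generator`, and `toy_score` are hypothetical names, the "model" is a noisy guesser for a single arithmetic question, and the "reward model" simply measures distance from the known answer. It is not the authors' implementation and not DVTS.

```python
import random
from typing import Callable, Tuple


def best_of_n(
    prompt: str,
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    n: int,
) -> Tuple[str, float]:
    """Sample n candidates and return the one the scorer rates highest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(prompt) for _ in range(n)]
    scored = [(score(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score


def make_toy_generator(seed: int) -> Callable[[str], str]:
    """Assumption: stands in for an LLM; returns noisy guesses near 42."""
    rng = random.Random(seed)

    def generate(prompt: str) -> str:
        return str(42 + rng.choice([-2, -1, 0, 0, 1, 2]))

    return generate


def toy_score(prompt: str, candidate: str) -> float:
    """Assumption: stands in for a reward model; higher is better."""
    return -abs(int(candidate) - 42)


if __name__ == "__main__":
    generate = make_toy_generator(seed=0)
    for budget in (1, 4, 16):
        answer, reward = best_of_n("17 + 25 = ?", generate, toy_score, budget)
        print(f"n={budget:2d} answer={answer} reward={reward}")
```

Raising `n` is the "think longer" knob: a larger sampling budget gives the scorer more candidates to choose from, at the cost of more generation calls. A real setup would swap the toy functions for an actual model and a learned verifier.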

What's next? The authors, from Hugging Face, have released a lightweight toolkit called Search and Learn for implementing search strategies with LLMs, built for speed with vLLM. Their results show that tiny 1B and 3B Llama Instruct models can outperform their much larger 8B and 70B siblings on the challenging MATH-500 benchmark if given enough "time to think." In other words, performance can improve without relying solely on larger models and bigger pretraining budgets. Further research in this area could lead to more efficient and cost-effective ways of scaling LLM performance.

3. TheAgentCompany: Benchmarking LLM Agents on Consequential Real World Tasks

Watching: TheAgentCompany (paper/repo)

What problem does it solve? As Large Language Models (LLMs) become more capable, there is growing interest in understanding their potential to automate or assist with real-world professional tasks. However, evaluating LLMs in realistic work environments is challenging due to the complexity and variety of tasks involved. TheAgentCompany benchmark aims to provide a standardized way to assess the performance of AI agents in a simulated software company setting, enabling researchers and industry to better understand the current capabilities and limitations of these systems.

How does it solve the problem? TheAgentCompany benchmark creates a self-contained environment that mimics a small software company, complete with internal websites, data, and a range of tasks typically performed by digital workers. These tasks include browsing the web, writing code, running programs, and communicating with coworkers. By providing a standardized testing environment, the benchmark allows for a more accurate and comparable evaluation of AI agents' abilities to autonomously complete work-related tasks. The authors evaluate agents powered by both closed API-based and open-weights language models to establish a baseline for current performance.

What's next? The results from TheAgentCompany benchmark suggest that while current AI agents can autonomously complete a portion of simpler tasks, more complex and long-horizon tasks remain challenging. It will therefore be important to regularly reassess their performance using benchmarks like TheAgentCompany to track progress and identify areas for further development. Additionally, as AI agents become more capable, it will be crucial to consider the potential economic and social implications of increased automation in the workplace, and to develop policies that ensure a smooth transition and protect worker well-being.

Papers of the Week:

Bridging AI and Science: Implications from a Large-Scale Literature Analysis of AI4Science

Superhuman performance of a large language model on the reasoning tasks of a physician

Proposer-Agent-Evaluator(PAE): Autonomous Skill Discovery For Foundation Model Internet Agents

How to Choose a Threshold for an Evaluation Metric for Large Language Models

Rethinking Uncertainty Estimation in Natural Language Generation