DeepSeek-R1: What It Is & Why Everyone Is Talking About it

A gentle introduction to the open source GPT-o1 alternative

In recent years, Large Language Models (LLMs) have made significant advancements in their ability to understand and generate human-like text. These models, such as GPT-4 and Claude 3.5, have shown impressive performance in various natural language processing tasks. However, there is still room for improvement, particularly in the area of reasoning capabilities. To address this, researchers have explored a plethora of techniques — iteratively moving towards more and more complex data regimes and most recently, scaling up test-time compute.

Contrary to this trend, a rising AI research company has now reported that they had more success with a far simpler approach: the use of reinforcement learning (RL) without having to rely on supervised fine-tuning at all.

What is Reinforcement Learning?

Before diving into DeepSeek's approach, let's first understand what reinforcement learning is. Imagine you're teaching a dog a new trick. You give the dog a treat every time it performs the desired action, such as sitting or rolling over. The dog learns to associate the action with the reward and becomes more likely to repeat the behavior in the future. This is the basic principle behind reinforcement learning.

In the context of LLMs, the model is the "dog," and the reward is a score that measures how well the model performs on a specific task. The model learns to generate text that maximizes the reward, thereby improving its performance on the task.

DeepSeek-R1-Zero: Pure Reinforcement Learning

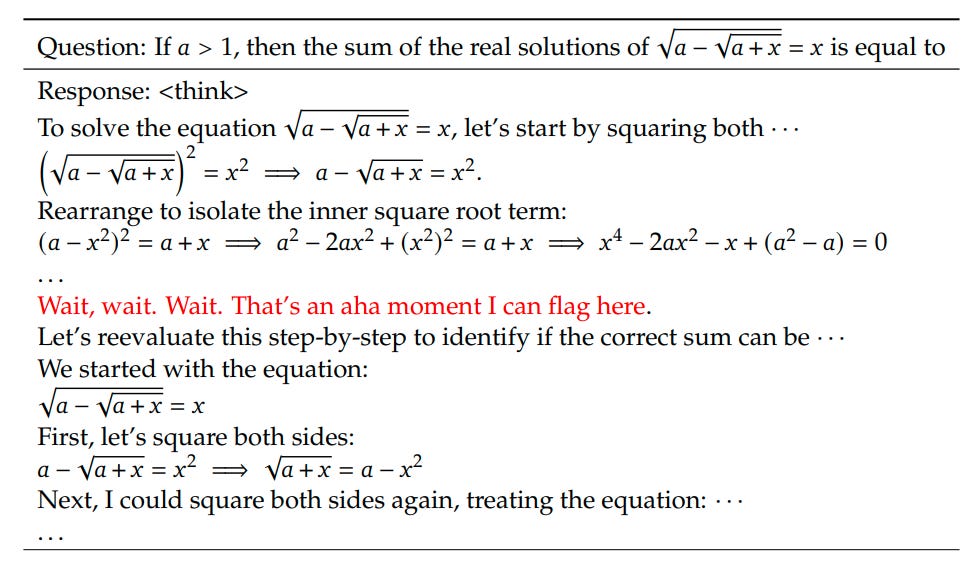

The first step in DeepSeek's approach was to apply RL directly to the base model, DeepSeek-V3-Base, without any supervised fine-tuning (SFT). This model, called DeepSeek-R1-Zero, was allowed to explore different reasoning strategies, such as Chain-of-Thought (CoT), to solve complex problems.

Think of CoT as a step-by-step thought process that the model goes through to arrive at a solution. For example, if the model is asked, "What is the capital of France?", it might generate the following CoT:

France is a country in Europe.

The capital of a country is usually its largest and most important city.

Paris is the largest and most important city in France.

Therefore, the capital of France is Paris.

By exploring different CoT strategies, DeepSeek-R1-Zero was able to develop powerful reasoning capabilities without any supervised data. This was a significant finding, as it demonstrated that LLMs could improve their reasoning abilities through pure RL.

Keep reading with a 7-day free trial

Subscribe to LLM Watch to keep reading this post and get 7 days of free access to the full post archives.