🎁 Meta Reveals New AI Architecture

And how graph-enhanced agents can improve RAG

In this issue:

How Meta wants to take LLMs to the next level

A smaller, more transparent o1 alternative

Graph agents improving RAG

1. Large Concept Models: Language Modeling in a Sentence Representation Space

Watching: LCMs (paper)

What problem does it solve? Current Large Language Models (LLMs) operate at the token level, processing input and generating output one token at a time. Humans, by contrast, process information at levels of abstraction well above single words. This research introduces an architecture that operates on explicit higher-level semantic representations called "concepts," aiming to bridge the gap between human-like understanding and the token-based approach of current LLMs.

How does it solve the problem? The proposed "Large Concept Model" uses a language- and modality-agnostic representation of ideas or actions called "concepts." In this study, a concept is assumed to correspond to a sentence, and the SONAR sentence embedding space, which supports up to 200 languages in both text and speech modalities, is used. The model is trained to perform autoregressive sentence prediction in the embedding space using various approaches, including MSE regression, diffusion-based generation, and models operating in a quantized SONAR space.
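To make "autoregressive sentence prediction in the embedding space" concrete, here is a toy sketch of the MSE-regression idea. It is not the paper's model. The `embed` function is a hypothetical stand-in for the SONAR encoder (a hashed bag of words), the predictor is a single linear map conditioned only on the previous concept instead of a Transformer over the whole prefix, and `decode` approximates the SONAR decoder by picking the nearest candidate sentence.

```python
import math

DIM = 8  # toy embedding size; real SONAR vectors are far larger


def embed(sentence: str) -> list[float]:
    """Hypothetical stand-in for a SONAR encoder: hashed bag of words, L2-normalised."""
    vec = [0.0] * DIM
    for word in sentence.lower().split():
        # Deterministic bucket (Python's built-in hash() is salted per process).
        vec[sum(map(ord, word)) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def predict(W: list[list[float]], x: list[float]) -> list[float]:
    """Predict the next concept embedding as a linear map of the current one."""
    return [sum(W[i][j] * x[j] for j in range(DIM)) for i in range(DIM)]


def mse(a: list[float], b: list[float]) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def avg_loss(W: list[list[float]], docs: list[list[str]]) -> float:
    """Mean next-concept MSE over every consecutive sentence pair."""
    pairs = [(embed(x), embed(y)) for doc in docs for x, y in zip(doc, doc[1:])]
    return sum(mse(predict(W, x), y) for x, y in pairs) / len(pairs)


def train(docs: list[list[str]], epochs: int = 200, lr: float = 0.1) -> list[list[float]]:
    """Fit W by SGD on the MSE between predicted and actual next-sentence embeddings."""
    W = [[0.0] * DIM for _ in range(DIM)]
    for _ in range(epochs):
        for doc in docs:
            embs = [embed(s) for s in doc]
            for x, y in zip(embs, embs[1:]):
                pred = predict(W, x)
                for i in range(DIM):
                    # d/dW[i][j] of the mean squared error = 2/DIM * (pred_i - y_i) * x_j
                    g = 2.0 / DIM * (pred[i] - y[i])
                    for j in range(DIM):
                        W[i][j] -= lr * g * x[j]
    return W


def decode(vec: list[float], candidates: list[str]) -> str:
    """Stand-in for the SONAR decoder: return the closest candidate sentence."""
    return min(candidates, key=lambda s: mse(embed(s), vec))


def generate(W: list[list[float]], first: str, candidates: list[str], steps: int = 2) -> list[str]:
    """Roll out in embedding space, feeding each predicted vector back in."""
    out, vec = [first], embed(first)
    for _ in range(steps):
        vec = predict(W, vec)
        out.append(decode(vec, candidates))
    return out
```

The key design point the sketch tries to show is that generation never touches tokens: the model predicts a whole sentence vector, and text only appears when a vector is decoded.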

What's next? The Large Concept Model shows impressive zero-shot generalization across many languages, outperforming existing LLMs of the same size. Future work could explore richer definitions of "concepts" beyond single sentences and evaluate the model on a wider range of tasks. Scaling up model size and training data could also improve results further.

2. Mulberry: Empowering MLLM with o1-like Reasoning and Reflection via Collective Monte Carlo Tree Search

Watching: Mulberry (paper)

What problem does it solve? While (Multimodal) Large Language Models (MLLMs) have shown impressive performance on a wide range of tasks, their reasoning abilities are still limited. They often struggle to provide step-by-step explanations for their answers, which are crucial for building trust and understanding in AI systems. Mulberry addresses this by developing an MLLM that generates intermediate reasoning steps on the way to its final answer.

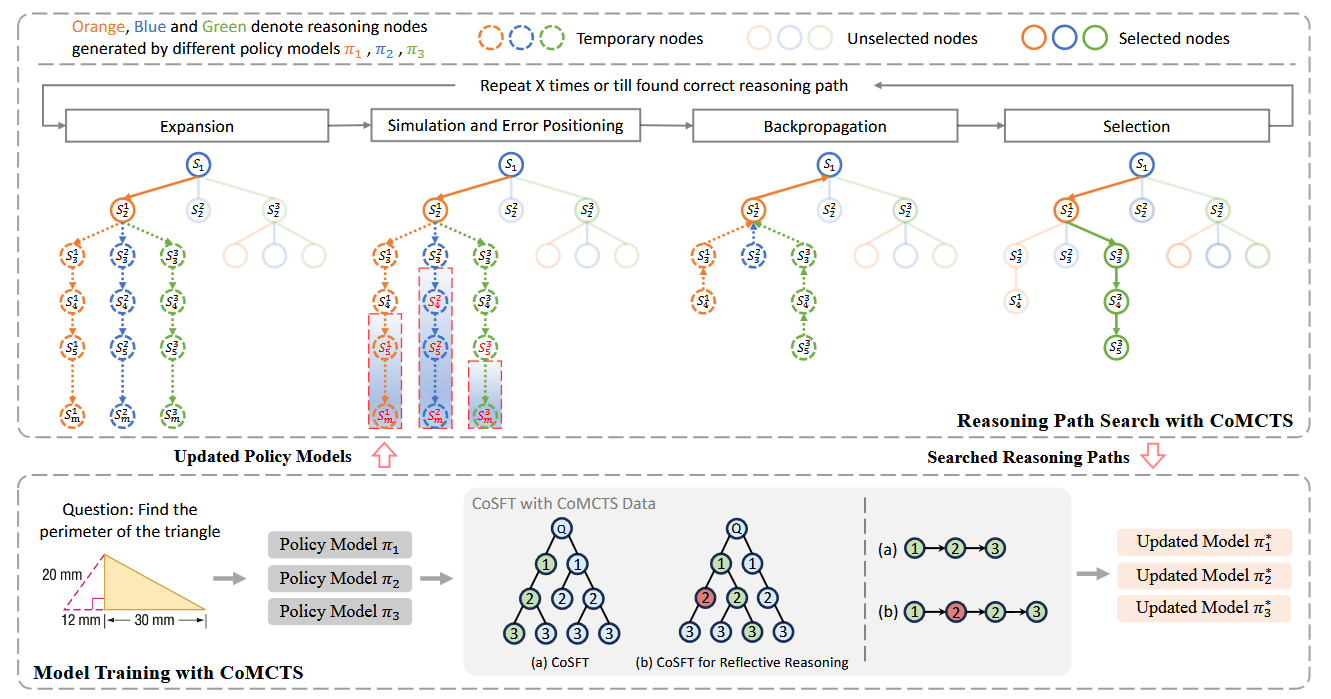

How does it solve the problem? Mulberry introduces a novel learning-to-reason method called Collective Monte Carlo Tree Search (CoMCTS). CoMCTS leverages the collective knowledge of multiple models to collaboratively search for effective reasoning paths. It involves four iterative operations: Expansion, Simulation and Error Positioning, Backpropagation, and Selection. By using CoMCTS, the authors constructed Mulberry-260k, a multimodal dataset with explicit reasoning nodes for each question. This dataset is then used to train Mulberry, a series of MLLMs with step-by-step reasoning and reflection capabilities.
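The four CoMCTS operations can be illustrated with a toy search. This is a hedged sketch, not the authors' implementation: the "models" in `PROPOSERS` are hypothetical functions that each propose one next reasoning step on a made-up task (reach `TARGET` from 0), the "collective" score averages hypothetical `EVALUATORS`, and error positioning is simplified to pruning any step that overshoots the target. As in CoMCTS, the search stops once a path reaching a correct answer is found.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Optional

TARGET = 10

# Hypothetical "models": each proposes one candidate next step from a state.
PROPOSERS: list[Callable[[int], int]] = [lambda s: s + 1, lambda s: s + 3, lambda s: s * 2]

# Hypothetical evaluators; their mean is the collective score of a step.
EVALUATORS: list[Callable[[int], float]] = [
    lambda s: s / TARGET,                              # linear progress
    lambda s: 1.0 - (TARGET - s) ** 2 / TARGET ** 2,   # smoother progress estimate
]


@dataclass
class Node:
    state: int
    parent: Optional["Node"] = None
    children: list["Node"] = field(default_factory=list)
    visits: int = 0
    value: float = 0.0

    def ucb(self, c: float = 1.4) -> float:
        if self.visits == 0:
            return float("inf")
        exploit = self.value / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore


def collective_score(state: int) -> float:
    if state > TARGET:  # overshooting marks the step as erroneous
        return 0.0
    return sum(e(state) for e in EVALUATORS) / len(EVALUATORS)


def path_to(node: Optional[Node]) -> list[int]:
    states = []
    while node is not None:
        states.append(node.state)
        node = node.parent
    return states[::-1]


def comcts(start: int = 0, iterations: int = 200) -> Optional[list[int]]:
    root = Node(start)
    for _ in range(iterations):
        # Selection: descend by UCB until reaching a leaf.
        node = root
        while node.children:
            node = max(node.children, key=lambda ch: ch.ucb())
        if node.state == TARGET:
            return path_to(node)  # a correct reasoning path was found
        # Expansion: every model proposes a candidate next step.
        proposals = {p(node.state) for p in PROPOSERS} - {node.state}
        # Simulation and error positioning: score candidates, prune erroneous ones.
        for state in sorted(proposals):
            score = collective_score(state)
            if score > 0.0:
                node.children.append(Node(state, parent=node, visits=1, value=score))
        reward = max((ch.value for ch in node.children), default=0.0)
        # Backpropagation: push the best candidate's score up to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    return None
```

Pooling proposals from several models widens the set of candidate steps at each expansion, which is the intuition behind the "collective" part of the method.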

What's next? (M)LLMs that produce explicit reasoning steps might enable AI systems to give more transparent and interpretable explanations for their decisions. This is particularly important in domains such as healthcare, finance, and law, where trust and accountability are crucial. We can expect more research on improving the reasoning capabilities of (M)LLMs and on building datasets that support it. The multimodal data in Mulberry-260k also points toward more comprehensive and robust reasoning systems.

3. GeAR: Graph-enhanced Agent for Retrieval-augmented Generation

Watching: GeAR (paper)

What problem does it solve? Retrieval-augmented generation (RAG) systems rely on effective document retrieval to provide relevant information for generating accurate responses. However, conventional sparse or dense retrievers face challenges in multi-hop retrieval scenarios, where the required information is spread across multiple documents. This limitation hinders the performance of RAG systems in complex question answering tasks that require reasoning over multiple pieces of information.

How does it solve the problem? GeAR addresses the limitations of conventional retrievers in multi-hop scenarios through two key innovations. First, it introduces graph expansion, which enhances any base retriever, such as BM25, by leveraging the LLM to synchronize information from passages with triples and expand the graph by exploring diverse beams of triples that link multi-hop contexts. This strategy allows GeAR to effectively retrieve relevant information spread across multiple documents. Second, GeAR incorporates an agent framework that utilizes the multi-hop contexts returned by the graph retriever to construct a gist memory, which summarizes the retrieved information across iterations. This gist memory enables the LLM to reason over the collected information and generate accurate responses.
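The graph-expansion idea can be sketched on a tiny corpus. Everything here is hypothetical and simplified: the triples are hand-written rather than extracted by an LLM, `base_retrieve` is a term-overlap ranker standing in for BM25, beams are scored by query-term overlap, and `update_gist` is a minimal stand-in for GeAR's gist memory (no multi-iteration agent loop). It shows how a base retriever that finds only the first hop can be expanded along linked triples to reach the second.

```python
STOP = {"a", "in", "is", "of", "that", "the", "was", "what", "where", "which"}

# Toy corpus: passage id -> (text, triples). The triples are hand-written for illustration.
CORPUS = {
    "p1": ("Marie Curie was born in Warsaw.", [("Marie Curie", "born in", "Warsaw")]),
    "p2": ("Warsaw is the capital of Poland.", [("Warsaw", "capital of", "Poland")]),
    "p3": ("Poland is a member of the European Union.",
           [("Poland", "member of", "European Union")]),
    "p4": ("Paris is the capital of France.", [("Paris", "capital of", "France")]),
}


def tokens(text: str) -> set[str]:
    words = text.lower().replace(".", "").replace("?", "").split()
    return {w for w in words if w not in STOP}


def base_retrieve(query: str, k: int = 1) -> list[str]:
    """Term-overlap ranking, a crude stand-in for BM25."""
    q = tokens(query)
    ranked = sorted(CORPUS, key=lambda pid: len(q & tokens(CORPUS[pid][0])), reverse=True)
    return ranked[:k]


def chain_score(chain, q: set[str]) -> int:
    """Score a chain of (triple, passage id) steps by query-term coverage."""
    words: set[str] = set()
    for triple, _pid in chain:
        words |= tokens(" ".join(triple))
    return len(q & words)


def expand_graph(query: str, seeds: list[str], hops: int = 2, beam_width: int = 2):
    """Beam search over triples, linking each chain's tail entity to a new head."""
    q = tokens(query)
    by_head: dict[str, list] = {}
    for pid, (_text, triples) in CORPUS.items():
        for t in triples:
            by_head.setdefault(t[0], []).append((t, pid))
    beams = [((t, pid),) for pid in seeds for t in CORPUS[pid][1]]
    for _ in range(hops):
        candidates = dict.fromkeys(beams)  # ordered set; shorter chains stay in play
        for beam in beams:
            tail = beam[-1][0][2]
            for step in by_head.get(tail, []):
                if step not in beam:
                    candidates[beam + (step,)] = None
        beams = sorted(candidates, key=lambda b: chain_score(b, q), reverse=True)[:beam_width]
    return beams


def update_gist(gist: list[str], beams) -> list[str]:
    """Minimal gist memory: append newly found facts, skipping duplicates."""
    for beam in beams:
        for (head, rel, tail), _pid in beam:
            fact = f"{head} {rel} {tail}"
            if fact not in gist:
                gist.append(fact)
    return gist
```

For the query "Marie Curie was born in a city that is the capital of which country?", the base retriever alone returns only `p1`; expanding from it follows the shared entity "Warsaw" into `p2`, and the top beam ends at "Poland".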

What's next? Future research could apply graph-based retrievers and agent frameworks to other complex natural language processing tasks that require reasoning over multiple pieces of information. The synergy between the graph retriever and the LLM in GeAR also suggests further gains from letting the LLM guide retrieval more directly. We can expect more techniques for reasoning effectively over large collections of documents, leading to more accurate and informative responses.

Papers of the Week:

B-STaR: Monitoring and Balancing Exploration and Exploitation in Self-Taught Reasoners

Better Think with Tables: Leveraging Tables to Enhance Large Language Model Comprehension

System-2 Mathematical Reasoning via Enriched Instruction Tuning
