🧪The First Fully AI-Designed Drug... Almost

How AI-human collaboration could change scientific research forever

In this issue:

AI agents designed new nanobodies against SARS-CoV-2

Semantic backpropagation for AI agents

Amazon enters the foundation model arena

1. The Virtual Lab: AI Agents Design New SARS-CoV-2 Nanobodies with Experimental Validation

Watching: Virtual Lab (paper)

What problem does it solve? Interdisciplinary research is becoming increasingly important for tackling complex scientific problems. However, assembling a team of experts from diverse fields can be challenging due to limited access and resources. Large Language Models (LLMs) have shown promise in assisting researchers across various domains by answering scientific questions. The Virtual Lab takes this a step further by creating an AI-human collaboration that simulates an interdisciplinary research team, enabling sophisticated scientific research without the need for a physical team of experts.

How does it solve the problem? The Virtual Lab consists of an LLM principal investigator agent that guides a team of LLM agents with different scientific backgrounds, such as a chemist agent, a computer scientist agent, and a critic agent. The human researcher provides high-level feedback to the team. The research is conducted through a series of team meetings, where the agents discuss the scientific agenda, and individual meetings, where each agent accomplishes specific tasks related to their expertise. This setup allows for a structured and efficient approach to interdisciplinary research, leveraging the knowledge and problem-solving abilities of LLMs.
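To make the meeting structure more concrete, here is a minimal Python sketch of how team meetings and individual meetings could be orchestrated. It is not the authors' implementation. The `Agent` class, the `stub_llm` placeholder, the number of discussion rounds, and the assumption that the critic reviews each individual-meeting draft are all hypothetical choices made for illustration. A real system would replace `stub_llm` with an actual model call.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for a chat-model call: (system_prompt, transcript) -> reply.
# A real Virtual Lab would call an LLM API here; this sketch stays model-agnostic.
LLM = Callable[[str, List[str]], str]


@dataclass
class Agent:
    title: str      # e.g. "Principal Investigator", "Chemist", "Scientific Critic"
    expertise: str  # role description used as the agent's system prompt

    def respond(self, llm: LLM, transcript: List[str]) -> str:
        return llm(f"You are the {self.title}. {self.expertise}", transcript)


def team_meeting(llm: LLM, pi: Agent, members: List[Agent],
                 agenda: str, rounds: int = 2) -> List[str]:
    """PI opens each round, every member responds in turn, PI summarizes at the end."""
    transcript = [f"Agenda: {agenda}"]  # agenda supplied by the human researcher
    for _ in range(rounds):
        transcript.append(f"{pi.title}: {pi.respond(llm, transcript)}")
        for member in members:
            transcript.append(f"{member.title}: {member.respond(llm, transcript)}")
    transcript.append(f"{pi.title} (summary): {pi.respond(llm, transcript)}")
    return transcript


def individual_meeting(llm: LLM, agent: Agent, critic: Agent,
                       task: str, rounds: int = 1) -> List[str]:
    """One agent works on a task; the critic reviews each draft (an assumption)."""
    transcript = [f"Task: {task}"]
    for _ in range(rounds):
        transcript.append(f"{agent.title}: {agent.respond(llm, transcript)}")
        transcript.append(f"{critic.title}: {critic.respond(llm, transcript)}")
    transcript.append(f"{agent.title} (final): {agent.respond(llm, transcript)}")
    return transcript


def stub_llm(system_prompt: str, transcript: List[str]) -> str:
    # Deterministic placeholder so the sketch runs without a model.
    return f"reply to turn {len(transcript)}"


pi = Agent("Principal Investigator", "Lead the project and make decisions.")
chemist = Agent("Chemist", "Advise on molecular design.")
cs = Agent("Computer Scientist", "Advise on computational tools.")
critic = Agent("Scientific Critic", "Point out flaws and risks.")

team_notes = team_meeting(stub_llm, pi, [chemist, cs, critic],
                          agenda="Choose a strategy for nanobody design.")
task_notes = individual_meeting(stub_llm, cs, critic,
                                task="Write code to score candidate nanobodies.")
print("\n".join(team_notes))
```

Keeping every turn in a shared transcript is what lets later speakers (and the PI's summary) build on earlier contributions; the human researcher's role in this sketch is limited to setting the agenda and tasks.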

What's next? The Virtual Lab's nanobody designs against recent SARS-CoV-2 variants were validated experimentally, which suggests that this kind of AI-human collaboration can contribute to real-world scientific discovery rather than just answering questions. Connecting the agents to additional AI tools and databases could further extend what the Virtual Lab can do. Environments like it may become a valuable option for researchers and institutions that want to pursue interdisciplinary research without extensive resources or a physically co-located team.

2. How to Correctly do Semantic Backpropagation on Language-based Agentic Systems

Watching: Semantic Backpropagation (paper/code)

What problem does it solve? Language-based agentic systems, such as chatbots and virtual assistants, have made significant strides in tackling real-world challenges. However, optimizing these systems often requires extensive manual effort. Recent research has shown that representing these systems as computational graphs can enable automatic optimization. Despite this progress, current Graph-based Agentic System Optimization (GASO) methods struggle to properly assign feedback to individual system components based on the overall system output.

How does it solve the problem? The researchers formalize the concept of semantic backpropagation using semantic gradients. This approach generalizes key optimization techniques, including reverse-mode automatic differentiation and TextGrad, by leveraging the relationships between nodes with a common successor. Semantic gradients provide directional information on how changes to each component of an agentic system can improve the system's output. The proposed method, called semantic gradient descent, effectively solves GASO problems by utilizing these gradients.
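The claim that semantic backpropagation generalizes reverse-mode automatic differentiation can be illustrated with one generic reverse pass that carries either numbers or text. The Python sketch below does not reproduce the paper's formalism or code. The graph encoding, `backpropagate`, `semantic_local_backward`, and `semantic_gradient_step` are hypothetical names, and the text "gradients" come from string-formatting stubs standing in for LLM calls. The point it shows is structural: the message sent from a successor to one of its inputs is computed with all of that successor's inputs in view, so siblings sharing a common successor inform each other's gradients.

```python
from typing import Callable, Dict, List, Sequence

# preds[node] lists a node's inputs; `order` is topological (inputs first).
# Gradients are generic: floats reproduce reverse-mode autodiff, strings act
# as (stubbed) semantic gradients.


def backpropagate(order: List[str], preds: Dict[str, List[str]],
                  values: Dict[str, object], output: str, output_grad,
                  local_backward: Callable, aggregate: Callable) -> Dict[str, object]:
    """Generic reverse pass. The message from successor w to its input i uses
    w's own gradient and *all* of w's inputs, so siblings are visible."""
    incoming: Dict[str, list] = {n: [] for n in order}
    incoming[output].append(output_grad)
    grads: Dict[str, object] = {}
    for node in reversed(order):
        if not incoming[node]:
            continue  # node does not influence the output
        grads[node] = aggregate(incoming[node])  # combine messages from all successors
        inputs: Sequence[object] = [values[p] for p in preds.get(node, [])]
        for i, p in enumerate(preds.get(node, [])):
            incoming[p].append(local_backward(node, grads[node], inputs, i))
    return grads


# 1) Numeric case: y = w + u with w = u * v, i.e. ordinary reverse-mode autodiff.
num_order = ["u", "v", "w", "y"]
num_preds = {"w": ["u", "v"], "y": ["w", "u"]}
num_values = {"u": 3.0, "v": 4.0, "w": 12.0, "y": 15.0}


def numeric_local_backward(node, grad, inputs, i):
    if node == "w":               # product: d(u*v)/du = v needs the sibling's value
        return grad * inputs[1 - i]
    return grad                   # sum: d(w+u)/dw = d(w+u)/du = 1


num_grads = backpropagate(num_order, num_preds, num_values, "y", 1.0,
                          numeric_local_backward, sum)
print(num_grads)  # dy/du = v + 1 = 5 and dy/dv = u = 3


# 2) Semantic case: the same reverse pass with text "gradients".
txt_order = ["instructions", "question", "answer"]
txt_preds = {"answer": ["instructions", "question"]}
txt_values = {"instructions": "Answer briefly.",
              "question": "What is 17 * 3?",
              "answer": "50"}


def semantic_local_backward(node, grad, inputs, i):
    # Hypothetical stand-in for an LLM prompt that turns feedback on `node`
    # into feedback on input i, with the sibling inputs shown as context.
    siblings = [str(x) for j, x in enumerate(inputs) if j != i]
    return f"Input {i} of '{node}' (siblings: {siblings}) should change so that: {grad}"


txt_grads = backpropagate(txt_order, txt_preds, txt_values, "answer",
                          "The answer is wrong; 17 * 3 = 51.",
                          semantic_local_backward, "\n".join)


def semantic_gradient_step(value: str, grad: str) -> str:
    # Hypothetical optimizer step: a real system would ask an LLM to rewrite
    # `value` in light of `grad`; here the feedback is only appended.
    return f"{value} [revise per: {grad}]"


# Only the trainable prompt is updated; the question is a fixed input.
new_instructions = semantic_gradient_step(txt_values["instructions"],
                                          txt_grads["instructions"])
print(new_instructions)
```

Swapping `sum` for `"\n".join` and a derivative rule for a feedback-writing prompt is the only difference between the two runs, which is the sense in which the numeric case is a special instance of the semantic one in this sketch.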

What's next? The researchers demonstrated the effectiveness of their approach on the BIG-Bench Hard and GSM8K benchmarks, where it outperforms existing state-of-the-art methods for GASO problems. They also conducted a detailed ablation study on the LIAR dataset, showcasing the parsimonious nature of their method. To facilitate further research and adoption, the authors have made their implementation publicly available. This work lays the foundation for more efficient and automated optimization techniques, potentially reducing the manual effort required to optimize these systems.

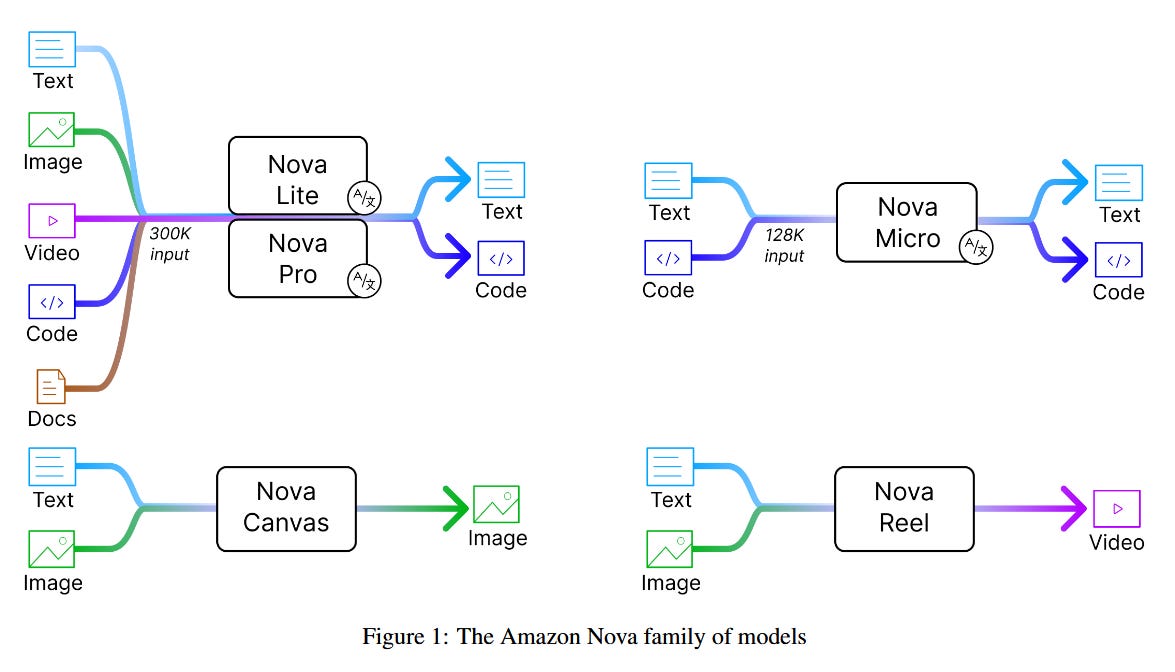

3. The Amazon Nova Family of Models: Technical Report and Model Card

Watching: Amazon Nova (paper)

What problem does it solve? Amazon Nova is a suite of foundation models that aims to deliver state-of-the-art performance across a wide range of tasks while maintaining a strong focus on cost-effectiveness and efficiency. The models cater to various use cases, from multimodal processing (Nova Pro and Nova Lite) to low-latency text generation (Nova Micro) and high-quality image and video generation (Nova Canvas and Nova Reel). By offering a diverse set of models with different capabilities and price points, Amazon Nova seeks to address the growing demand for powerful, versatile, and accessible AI solutions.

How does it solve the problem? To assess the quality and reliability of its models, Amazon ran a human evaluation designed to reduce bias. Annotation collection was outsourced to a third-party vendor, which received detailed guidelines and examples to keep judgments consistent across evaluations. The vendor's annotators were trained on expert-provided examples, and their work was spot-checked for accuracy. Each video comparison was assessed by three different evaluators, and the final outcome was determined by majority vote, which limits the influence of any single annotator's bias and makes the evaluation more robust. Using a curated prompt set, Amazon compared Nova Reel against other state-of-the-art video models, such as Gen-3 Alpha by Runway ML and Luma 1.6 by Luma Labs, reporting win, tie, and loss rates across various dimensions.
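The consensus-voting step is simple arithmetic, sketched below in Python. This is an illustration, not Amazon's evaluation code: the function names are hypothetical, the votes are made-up toy data, and the report's handling of a three-way split (one win, one tie, one loss) is not described here, so counting such cases as ties is an assumption.

```python
from collections import Counter
from typing import Dict, List, Sequence

OUTCOMES = ("win", "tie", "loss")


def consensus(votes: Sequence[str]) -> str:
    """Majority vote over the per-annotator judgments for one comparison.
    Assumption: with no strict majority (e.g. a 1-1-1 split), count a tie."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count > len(votes) / 2 else "tie"


def win_tie_loss_rates(all_votes: List[Sequence[str]]) -> Dict[str, float]:
    """Fraction of comparisons whose consensus outcome is win, tie, or loss."""
    outcomes = Counter(consensus(v) for v in all_votes)
    n = len(all_votes)
    return {o: outcomes[o] / n for o in OUTCOMES}


# Toy, made-up votes from three evaluators per prompt (not data from the report).
toy_votes = [
    ["win", "win", "tie"],
    ["loss", "tie", "loss"],
    ["win", "tie", "loss"],   # no majority, counted as a tie under our assumption
    ["win", "win", "win"],
]
rates = win_tie_loss_rates(toy_votes)
print(rates)
```

Reporting rates "across various dimensions" would amount to running the same aggregation once per dimension, with a separate set of votes for each.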

What's next? As Amazon continues to develop and refine its Nova foundation models, we can expect further improvements in performance, efficiency, and cost-effectiveness. The company's stated commitment to responsible AI development, customer trust, security, and reliability suggests that it will continue to prioritize these aspects as it expands its offerings. In the future, we may see additional models tailored to specific industries or use cases, as well as advancements in multimodal integration and cross-domain adaptation.

Papers of the Week:

ICLERB: In-Context Learning Embedding and Reranker Benchmark

Beyond Examples: High-level Automated Reasoning Paradigm in In-Context Learning via MCTS

Critical Tokens Matter: Token-Level Contrastive Estimation Enhances LLM's Reasoning Capability